Domenico Stefani: Research and Coding

Research

My research focuses on Machine Learning for music, sound processing for music, and real-time audio processing and Music Information Retrieval (MIR) on small, resource-constrained devices.

I am interested in both algorithmic and Machine Learning approaches to music processing that support artistic expression and end up in the hands of musicians.

Some of the cool projects I have worked on recently are:

- Improving the efficiency of a convolution-based Ambisonics spatial audio plugin (paper)

- Cross-circuit neural effect modeling (paper)

- An AI agent improvising with a human player in a duet (paper)

- Assessing the importance of accurate onset labels for real-time Music Information Retrieval (paper)

- Brain-controlled audio effect chains (paper)

For my PhD, I focused on smart musical instruments: musical instruments designed to recognize high-level traits or properties of a music signal, such as the expressive techniques being used or the mood of the music.

Improved Real-Time Six-degrees-of-freedom Dynamic Auralization Through Non-uniformly Partitioned Convolution

Domenico Stefani, Marco Binelli, Angelo Farina, Luca Turchet

Journal of the Audio Engineering Society (JAES)

BibTeX

@article{stefani2025improved,

author={Stefani, Domenico and Binelli, Marco and Farina, Angelo and Turchet, Luca},

journal={Journal of the Audio Engineering Society (JAES)},

title={{Improved Real-Time Six-degrees-of-freedom Dynamic Auralization Through Non-uniformly Partitioned Convolution}},

year={2025},

volume={73},

issue={10},

pages={671-681},

month={october},

doi={10.17743/jaes.2022.0224},

url={https://doi.org/10.17743/jaes.2022.0224},

}

A Decoupled VR and Real-time Audio System for Distributed Musical Collaboration

Alberto Boem, Ovidiu Costin Andrioaia, Domenico Stefani, Alessandra Micalizzi, Luca Turchet

International Symposium on the Internet of Sounds (IS2), L'Aquila, Italy, 2025

BibTeX

@inproceedings{boem2025decoupled,

author={Boem, Alberto and Andrioaia, Ovidiu Costin and Stefani, Domenico and Micalizzi, Alessandra and Turchet, Luca},

booktitle={2025 IEEE 6th International Symposium on the Internet of Sounds (IS2)},

title={A Decoupled VR and Real-time Audio System for Distributed Musical Collaboration},

year={2025},

volume={},

number={},

pages={1-10},

doi={10.1109/IS264627.2025.11284637},

address = "L'Aquila, Italy"

}

Connecting MIDI Interfaces to the Musical Metaverse

Gregorio Andrea Giudici, Domenico Stefani, Alberto Boem, Luca Turchet

International Symposium on the Internet of Sounds (IS2), L'Aquila, Italy, 2025

BibTeX

@inproceedings{giudici2025connecting,

author={Giudici, Gregorio Andrea and Stefani, Domenico and Boem, Alberto and Turchet, Luca},

booktitle={2025 IEEE 6th International Symposium on the Internet of Sounds (IS2)},

title={Connecting MIDI Interfaces to the Musical Metaverse},

year={2025},

volume={},

number={},

pages={1-9},

doi={10.1109/IS264627.2025.11284611},

address = "L'Aquila, Italy"

}

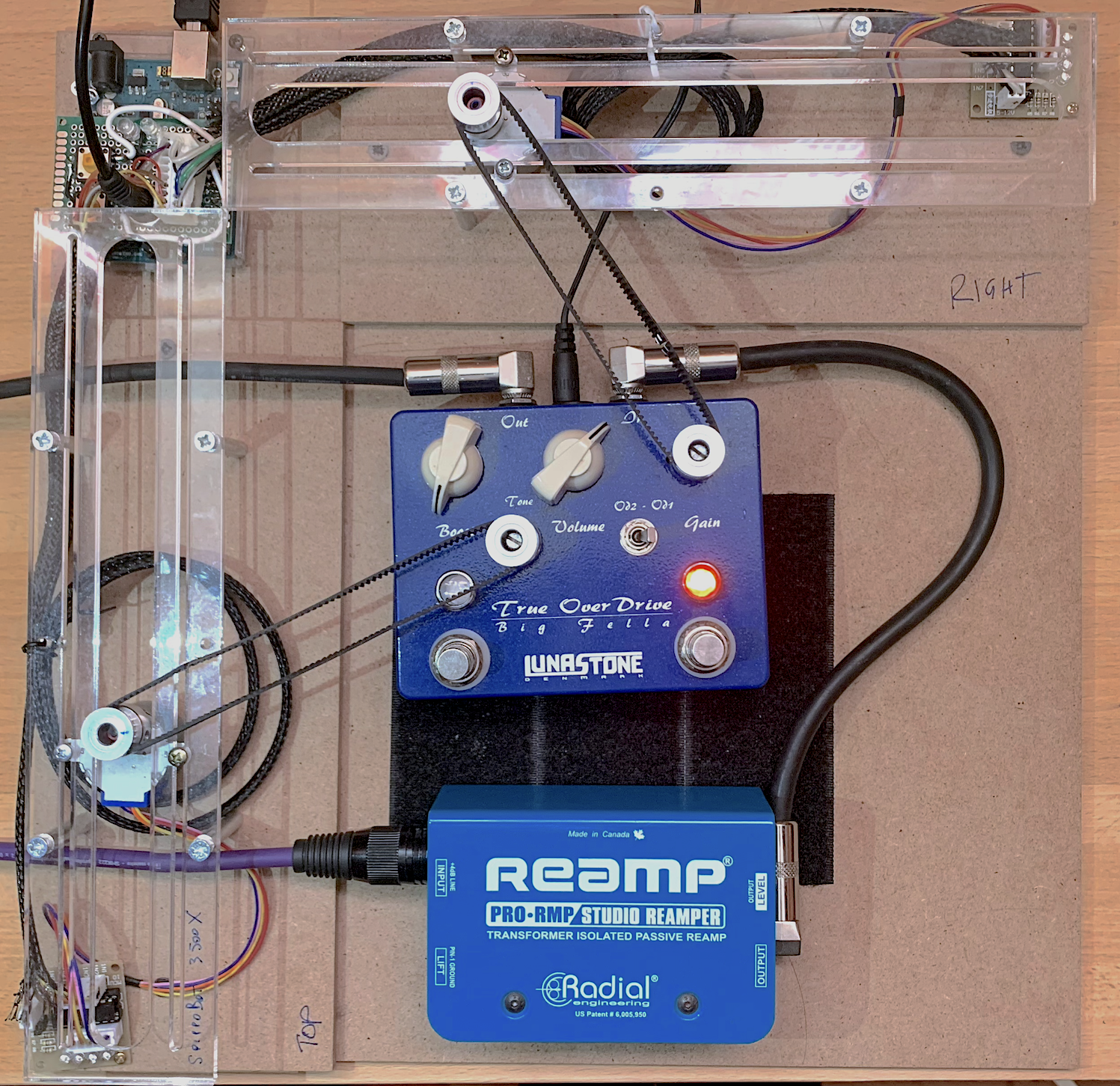

Morphdrive: Latent Conditioning For Cross-Circuit Effect Modeling And A Parametric Audio Dataset Of Analog Overdrive Pedals

Francesco Ardan Dal Rì, Domenico Stefani, Luca Turchet, Nicola Conci

Proceedings of the 28th Int. Conf. on Digital Audio Effects (DAFx25)

BibTeX

@inproceedings{dalri2025morphdrive,

author = "Dal R{\`i}, Francesco A. and Stefani, Domenico and Turchet, Luca and Conci, Nicola",

title = "{Morphdrive: Latent Conditioning For Cross-Circuit Effect Modeling And A Parametric Audio Dataset Of Analog Overdrive Pedals}",

booktitle = "Proceedings of the 28-th Int. Conf. on Digital Audio Effects (DAFx25)",

location = "Ancona, Italy",

editor = "Gabrielli, L. and Cecchi, S.",

year = "2025",

month = "Sept",

publisher = "",

pages = "23--30",

issn = "2413-6689",

}

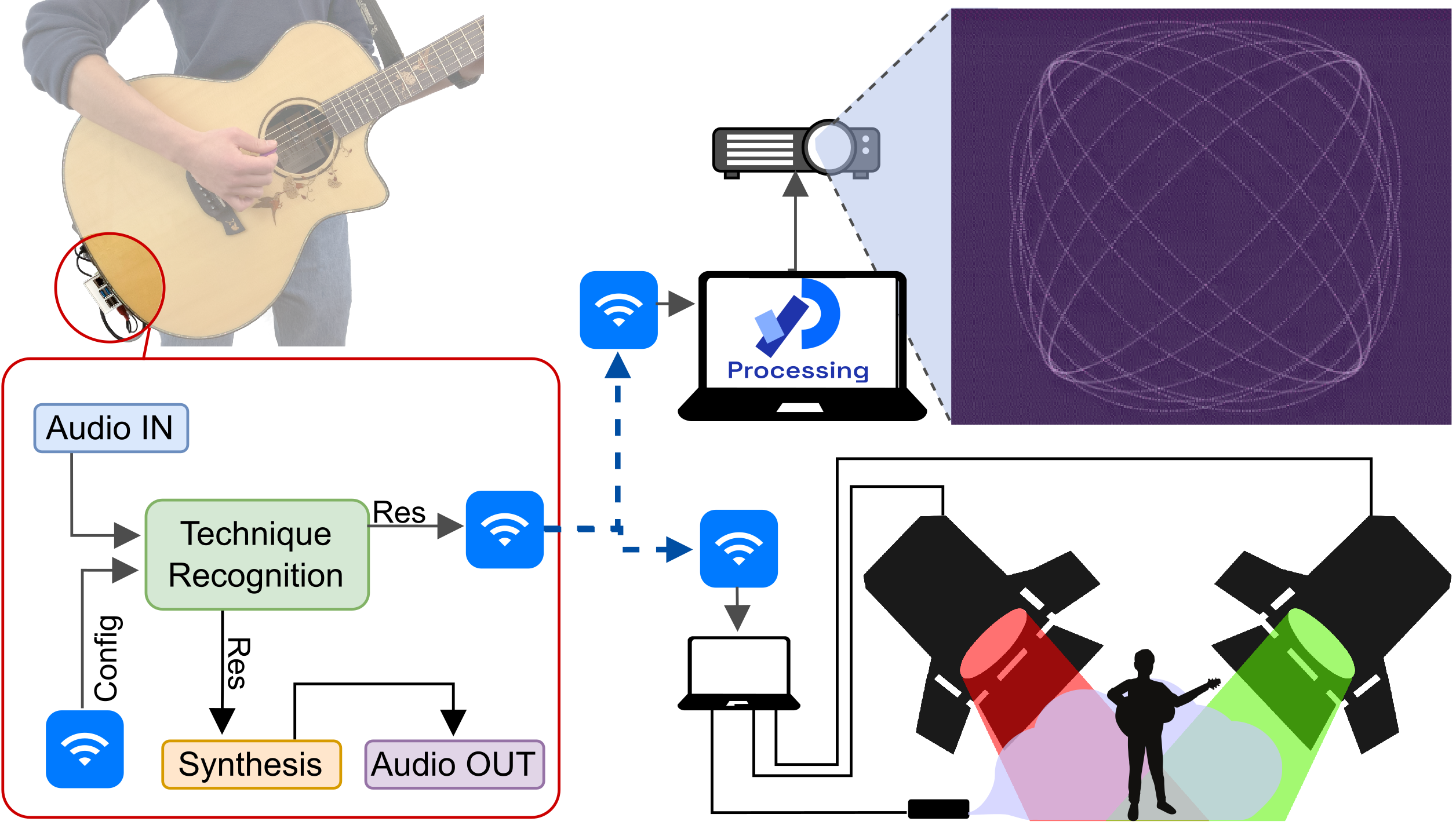

Real-Time Playing Technique Recognition Embedded in a Smart Acoustic Guitar

Domenico Stefani, Luca Turchet

EURASIP Journal on Audio, Speech, and Music Processing

BibTeX

@article{stefani2025realtime,

author={Stefani, Domenico and Turchet, Luca},

title={Real-time playing technique recognition embedded in a smart acoustic guitar},

journal={EURASIP Journal on Audio, Speech, and Music Processing},

year={2025},

month={Jul},

day={17},

volume={2025},

number={1},

pages={28},

abstract={The integration of real-time music information retrieval techniques into musical instruments is a crucial step towards smart musical instruments that can reason about the musical context. This paper presents a real-time guitar playing technique recognition system for a smart electro-acoustic guitar. The proposed system comprises a software recognition pipeline running on a Raspberry Pi 4 and is designed to listen to the guitar's audio signal and classify each note into eight playing techniques, both pitched and percussive. Real-time playing technique information is used in real-time to allow the musician to control wirelessly-connected stage equipment during performance. The recognition pipeline includes an onset detector, feature extractors, and a convolutional neural classifier. Four pipeline configurations are proposed, striking different balances between accuracy and sound-to-result latency. Results show how optimal performance improvements occur when latency constraints are increased from 15 to 45 ms, with performance varying between pitched and percussive techniques based on available audio context. Our findings highlight the challenges of generalization across players and instruments, demonstrating that accurate recognition requires substantial datasets and carefully selected cross-validation strategies. The research also reveals how individual player styles significantly impact technique recognition performance.},

issn={1687-4722},

doi={10.1186/s13636-025-00413-6},

url={https://doi.org/10.1186/s13636-025-00413-6}

}
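The abstract above describes a pipeline in which an onset detector triggers feature extraction and a convolutional classifier, under latency budgets between 15 and 45 ms. The sketch below illustrates only the budgeting side of that trade-off: a latency budget bounds how much audio after each onset can reach the classifier. It is not the paper's pipeline. The energy-ratio onset detector is a deliberately simple stand-in, the 48 kHz sample rate is an assumption, processing time is ignored, every function name is hypothetical, and the classifier itself is not shown.

```python
from typing import Iterator, List, Sequence, Tuple


def context_samples(latency_ms: float, sample_rate: int) -> int:
    """Upper bound on post-onset samples that fit in a latency budget.

    Processing time is ignored, so a real system has fewer samples available.
    """
    return int(round(latency_ms * sample_rate / 1000.0))


def frame_energies(signal: Sequence[float], hop: int) -> List[float]:
    """Energy (sum of squares) of consecutive, non-overlapping frames of `hop` samples."""
    return [
        sum(x * x for x in signal[i:i + hop])
        for i in range(0, len(signal) - hop + 1, hop)
    ]


def detect_onsets(signal: Sequence[float], hop: int = 64,
                  ratio: float = 4.0, floor: float = 1e-6) -> List[int]:
    """Toy energy-ratio onset detector (hypothetical stand-in).

    A frame is flagged when its energy exceeds `ratio` times the previous
    frame's energy plus a small `floor`, so near-silence does not trigger.
    Returns onset positions in samples.
    """
    energies = frame_energies(signal, hop)
    return [k * hop for k in range(1, len(energies))
            if energies[k] > ratio * energies[k - 1] + floor]


def classification_windows(signal: Sequence[float], sample_rate: int,
                           latency_ms: float, hop: int = 64
                           ) -> Iterator[Tuple[int, Sequence[float]]]:
    """Yield (onset, window): the audio a classifier could see within the budget."""
    n = context_samples(latency_ms, sample_rate)
    for onset in detect_onsets(signal, hop):
        window = signal[onset:onset + n]
        if len(window) == n:  # skip onsets too close to the end of the buffer
            yield onset, window


if __name__ == "__main__":
    sr = 48_000  # assumed sample rate
    print(context_samples(15, sr), context_samples(45, sr))  # 720 2160
    # Synthetic signal: 20 ms of silence followed by a constant burst.
    sig = [0.0] * 960 + [0.5] * 3000
    for onset, win in classification_windows(sig, sr, latency_ms=15):
        print(onset, len(win))  # 960 720
```

At 48 kHz, a 15 ms budget leaves at most 720 samples of post-onset audio and a 45 ms budget at most 2160, three times as much context for the classifier to work with.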

A Virtual Reality Interface for the Creation of 3D Spatial Audio Trajectories

Matteo Tomasetti, Bavo Van Kerrebroeck, Marcelo M. Wanderley, Domenico Stefani, and Luca Turchet

Journal of the Audio Engineering Society

BibTeX

@article{tomasetti2025sonospatia,

author = {Tomasetti, Matteo and Van Kerrebroeck, Bavo and Wanderley, Marcelo M. and Stefani, Domenico and Turchet, Luca},

title = {{A Virtual Reality Interface for the Creation of 3D Spatial Audio Trajectories}},

journal = {Journal of the Audio Engineering Society},

doi = {10.17743/jaes.2022.0215},

url = {http://dx.doi.org/10.17743/jaes.2022.0215},

year={2025},

volume={73},

issue={7/8},

pages={481-492},

month={July}

}

Musician-AI partnership mediated by emotionally-aware smart musical instruments

Luca Turchet, Domenico Stefani, Johan Pauwels

International Journal of Human-Computer Studies

BibTeX

@article{turchet2024musicianai,

title = {Musician-AI partnership mediated by emotionally-aware smart musical instruments},

journal = {International Journal of Human-Computer Studies},

volume = {191},

pages = {103340},

year = {2024},

issn = {1071-5819},

doi = {10.1016/j.ijhcs.2024.103340},

url = {https://www.sciencedirect.com/science/article/pii/S107158192400123X},

author = {Luca Turchet and Domenico Stefani and Johan Pauwels},

keywords = {Music information retrieval, Music emotion recognition, Smart musical instruments, Transfer learning, Context-aware computing, Trustworthy AI},

}

Esteso: Interactive AI Music Duet Based on Player-Idiosyncratic Extended Double Bass Techniques

Domenico Stefani, Matteo Tomasetti, Filippo Angeloni and Luca Turchet

In Proceedings of the International Conference on New Interfaces for Musical Expression (NIME'24), Utrecht, The Netherlands.

BibTeX

@inproceedings{stefani2024esteso,

address = {Utrecht, Netherlands},

articleno = {72},

author = {Domenico Stefani and Matteo Tomasetti and Filippo Angeloni and Luca Turchet},

booktitle = {Proceedings of the International Conference on New Interfaces for Musical Expression},

doi = {10.5281/zenodo.13904929},

editor = {S M Astrid Bin and Courtney N. Reed},

issn = {2220-4806},

month = {September},

numpages = {9},

pages = {490--498},

presentation-video = {https://youtu.be/mdb2Tlh4ub8?si=0m-6kqA_a_p-c2-z},

title = {Esteso: Interactive AI Music Duet Based on Player-Idiosyncratic Extended Double Bass Techniques},

track = {Papers},

url = {http://nime.org/proceedings/2024/nime2024_72.pdf},

year = {2024}

}

On the Importance of Temporally Precise Onset Annotations for Real-Time Music Information Retrieval: Findings from the AG-PT-set Dataset

Domenico Stefani, Gregorio A. Giudici, Luca Turchet

In Proceedings of the 19th International Audio Mostly Conference (AM'24)

BibTeX

@inproceedings{stefani2024importance,

author = {Stefani, Domenico and Giudici, Gregorio Andrea and Turchet, Luca},

title = {On the Importance of Temporally Precise Onset Annotations for Real-Time Music Information Retrieval: Findings from the AG-PT-set Dataset},

year = {2024},

isbn = {9798400709685},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3678299.3678325},

doi = {10.1145/3678299.3678325},

booktitle = {Proceedings of the 19th International Audio Mostly Conference: Explorations in Sonic Cultures},

pages = {270--284},

numpages = {15},

keywords = {Audio Processing, Music Information Retrieval, Real-time},

location = {Milan, Italy},

series = {AM '24}

}

BCHJam: a Brain-Computer Music Interface for Live Music Performance in Shared Mixed Reality Environments

M. Romani, G.A. Giudici, D. Stefani, D. Zanoni, A. Boem and L. Turchet

in Proceedings of the 5th International Symposium on the Internet of Sounds (IS2), Erlangen, Germany

This paper won the Best Student Paper award

BibTeX

@inproceedings{romani2024bchjam,

author={Romani, Michele and Giudici, Gregorio Andrea and Stefani, Domenico and Zanoni, Devis and Boem, Alberto and Turchet, Luca},

booktitle={2024 IEEE 5th International Symposium on the Internet of Sounds (IS2)},

title={{BCHJam:} a Brain-Computer Music Interface for Live Music Performance in Shared Mixed Reality Environments},

year={2024},

volume={},

number={},

pages={1-9},

keywords={Headphones;Instruments;Ecosystems;Music;Mixed reality;Visual effects;Brain-computer interfaces;Internet;Wireless fidelity;Tuning;Brain-computer interfaces;Mixed Reality;Performance Ecosystem;Internet of Musical Things},

doi={10.1109/IS262782.2024.10704087}

}

PhD Thesis "Embedded Real-time Deep Learning for a Smart Guitar: A Case Study on Expressive Guitar Technique Recognition"

BibTeX

@phdthesis{stefani2024thesis,

author = {Stefani, Domenico},

number = {2024},

school = {University of Trento},

title = {Embedded Real-time Deep Learning for a Smart Guitar: A Case Study on Expressive Guitar Technique Recognition},

year = {2024},

doi = {10.15168/11572_399995},

}

Real-Time Embedded Deep Learning on Elk Audio OS

in Proceedings of the 4th International Symposium on the Internet of Sounds (IS2), Pisa, Italy

BibTeX

@inproceedings{stefani2023realtime,

author={Stefani, Domenico and Turchet, Luca},

booktitle={4th International Symposium on the Internet of Sounds (IS2)},

title={Real-Time Embedded Deep Learning on Elk Audio OS},

year={2023},

volume={},

number={},

pages={21-30},

doi={10.1109/IEEECONF59510.2023.10335204}

}

A Comparison of Deep Learning Inference Engines for Embedded Real-time Audio Classification

in Proceedings of the 25th Int. Conf. on Digital Audio Effects (DAFx20in22)

BibTeX

@inproceedings{stefani2022comparison,

author = "Stefani, Domenico and Peroni, Simone and Turchet, Luca",

title = "{A Comparison of Deep Learning Inference Engines for Embedded Real-Time Audio Classification}",

booktitle = "Proceedings of the 25-th Int. Conf. on Digital Audio Effects (DAFx20in22)",

location = "Vienna, Austria",

eventdate = "2022-09-06/2022-09-10",

year = "2022",

month = "Sept.",

publisher = "",

issn = "2413-6689",

volume = "3",

doi = "",

pages = "256--263"

}

On the Challenges of Embedded Real-Time Music Information Retrieval

in Proceedings of the 25th Int. Conf. on Digital Audio Effects (DAFx20in22)

BibTeX

@inproceedings{stefani2022challenges,

author = "Stefani, Domenico and Turchet, Luca",

title = "{On the Challenges of Embedded Real-Time Music Information Retrieval}",

booktitle = "Proceedings of the 25-th Int. Conf. on Digital Audio Effects (DAFx20in22)",

location = "Vienna, Austria",

eventdate = "2022-09-06/2022-09-10",

year = "2022",

month = "Sept.",

publisher = "",

issn = "2413-6689",

volume = "3",

doi = "",

pages = "177--184"

}

Bio-Inspired Optimization of Parametric Onset Detectors

in Proceedings of the 24th International Conference on Digital Audio Effects (DAFx20in21), 2021

BibTeX

@inproceedings{stefani2021bioinspired,

author = "Stefani, Domenico and Turchet, Luca",

title = "{Bio-Inspired Optimization of Parametric Onset Detectors}",

booktitle = "Proc. 24th Int. Conf. on Digital Audio Effects (DAFx20in21)",

location = "Vienna, Austria",

eventdate = "2021-09-08/2021-09-10",

year = "2021",

month = "Sept.",

publisher = "",

issn = "2413-6689",

volume = "2",

pages = "268--275",

doi={10.23919/DAFx51585.2021.9768293}

}

More details on the talks at www.domenicostefani.com/talks

Towards Explainable Music Emotion Recognition for Guitar Improvisation

Michele Rossi, Domenico Stefani, Johan Pauwels, Giovanni Iacca, Luca Turchet

International Symposium on the Internet of Sounds (IS2), L'Aquila, Italy, 2025

BibTeX

@inproceedings{rossi2025towards,

author={Rossi, Michele and Stefani, Domenico and Pauwels, Johan and Iacca, Giovanni and Turchet, Luca},

booktitle={2025 IEEE 6th International Symposium on the Internet of Sounds (IS2)},

title={Towards Explainable Music Emotion Recognition for Guitar Improvisations},

year={2025},

volume={},

number={},

pages={1-5},

doi={10.1109/IS264627.2025.11284553},

address = "L'Aquila, Italy"
}

Talk: Artificial Intelligence as a Creative Partner in Music

SignalOff Festival 2025, Le Garage Lab, Trento, Italy

Demo of Esteso: an AI Music Duet Based on Extended Double Bass Techniques

Dictionary for Multidisciplinary Music Integration (DIMMI), Trento, November 29-30, 2024

This demo won the Best Demonstration award

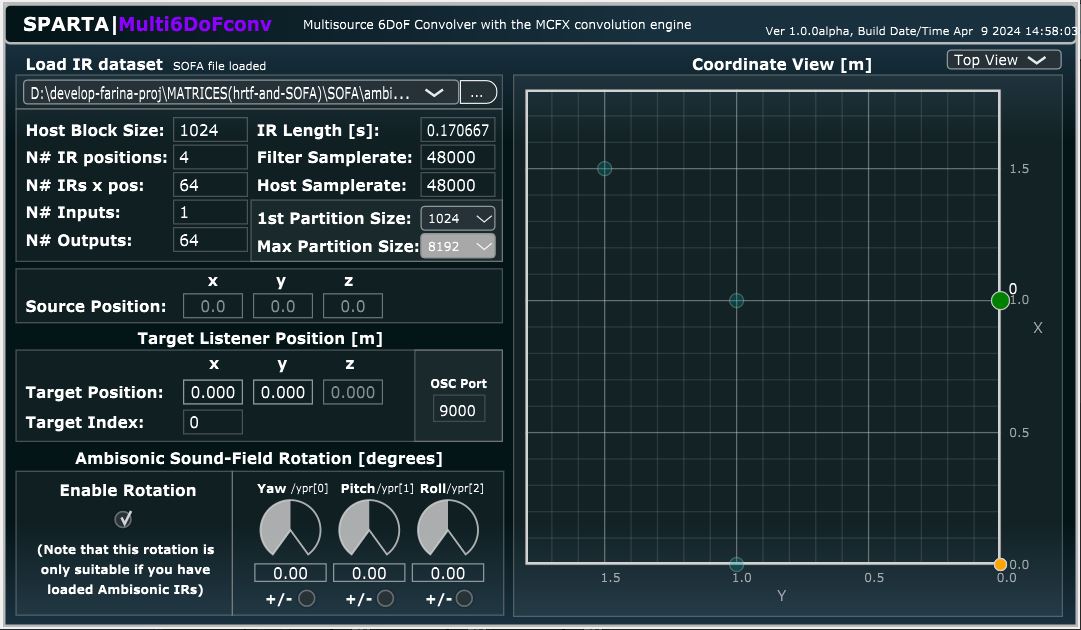

“Engine-Swap” on Two Spatial Audio Plugins Will Be Easy, Right? Lessons Learned

Talk at the Audio Developer Conference 2024, 11-13 Nov 2024, Bristol, UK

Abstract

Yes, spatial audio is complex, but these are two similar JUCE plugins, and I can just swap the core code components. It will be easy, right?

It wasn't, and it uncovered many unexpected challenges and learning opportunities.

In this talk, I will share my experience of improving an existing spatial audio plugin (SPARTA 6DoFConv) by replacing its convolution engine with a more efficient alternative. The process required deep dives into complex, sparsely commented audio DSP code and a good deal of problem-solving.

The core of this presentation will focus on the general lessons learned from this endeavor. I will discuss some of the strategies I employed to understand and navigate complex codebases and the practical steps taken to embed a new convolution engine in an audio plugin. I will also explore the unforeseen issues that arose, such as dealing with the drawbacks of highly optimized algorithms and integrating a crossfade system, which required a compromise between efficiency and level of integration.
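As a rough illustration of the crossfade mentioned above: when a listener moves and the active impulse response changes, switching filters abruptly can produce audible discontinuities, so one common approach is to filter the same input with both the old and the new impulse response and blend the two outputs over a transition. The sketch below assumes a single block with zero filter state, a linear fade, and hypothetical function names; it is not the crossfade system from the talk. A streaming engine would also carry each convolution's tail across blocks (for example with overlap-add), and running two convolutions during the transition is one concrete source of the efficiency cost.

```python
from typing import List, Sequence


def convolve(x: Sequence[float], h: Sequence[float]) -> List[float]:
    """Direct-form linear convolution; output length is len(x) + len(h) - 1."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


def linear_crossfade(old: Sequence[float], new: Sequence[float]) -> List[float]:
    """Fade from `old` to `new` across one block of equal length.

    The first sample is entirely `old`, the last entirely `new`.
    """
    n = len(old)
    if n != len(new):
        raise ValueError("blocks must have the same length")
    if n == 1:
        return [float(new[0])]
    return [o + (nw - o) * i / (n - 1) for i, (o, nw) in enumerate(zip(old, new))]


def render_ir_switch(block: Sequence[float], ir_old: Sequence[float],
                     ir_new: Sequence[float]) -> List[float]:
    """Filter one block with both IRs (hypothetical helper) and crossfade the results.

    Assumes zero filter state before the block; convolution tails are dropped here.
    """
    y_old = convolve(block, ir_old)[:len(block)]
    y_new = convolve(block, ir_new)[:len(block)]
    return linear_crossfade(y_old, y_new)


if __name__ == "__main__":
    # Constant input; the IR switches from unity gain to silence.
    print(render_ir_switch([1.0, 1.0, 1.0], [1.0], [0.0]))  # [1.0, 0.5, 0.0]
```

A linear fade keeps the level steady when the two outputs are strongly correlated, as they tend to be when neighbouring impulse responses are similar; an equal-power fade is the usual alternative for uncorrelated signals.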

This talk aims to provide practical insights for developers, especially those who are starting out and want to understand and customize other people's code. Join me in exploring the lessons learned, strategies employed, and trade-offs considered in creating a more efficient six-degrees-of-freedom spatial audio plugin.

Key Points:

• Addressing challenges with optimized algorithms and accepting trade-offs;

• General lessons learned and best practices when working with other people's plugin code;

• Practical knowledge of different multichannel convolution engines for Ambisonics reverberation and six-degrees-of-freedom (6DoF) navigation in extended reality applications.
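The key points above mention multichannel convolution engines, and the JAES paper listed earlier relies on non-uniformly partitioned convolution. The identity behind partitioned convolution fits in a few lines: cut the impulse response into consecutive partitions, convolve the input with each one, delay each result by its partition's offset, and sum. The sketch below is a single-channel, time-domain illustration with hypothetical function names, not the engine used in the plugin. Production engines typically compute each partition with FFTs, and non-uniform layouts use short partitions at the head of the impulse response to keep latency low and longer ones in the tail to reduce cost; none of that scheduling is shown here.

```python
from typing import List, Sequence, Tuple


def convolve(x: Sequence[float], h: Sequence[float]) -> List[float]:
    """Direct-form linear convolution; output length is len(x) + len(h) - 1."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


def split_ir(h: Sequence[float], sizes: Sequence[int]) -> List[Tuple[int, Sequence[float]]]:
    """Cut the IR into consecutive (offset, segment) partitions of the given sizes."""
    parts = []
    offset = 0
    for size in sizes:
        if offset >= len(h):
            break
        parts.append((offset, h[offset:offset + size]))
        offset += size
    if offset < len(h):
        raise ValueError("partition sizes do not cover the impulse response")
    return parts


def partitioned_convolve(x: Sequence[float], h: Sequence[float],
                         sizes: Sequence[int]) -> List[float]:
    """Convolve x with each partition, delay it by the partition offset, and sum."""
    y = [0.0] * (len(x) + len(h) - 1)
    for offset, segment in split_ir(h, sizes):
        for n, v in enumerate(convolve(x, segment)):
            y[offset + n] += v
    return y


if __name__ == "__main__":
    x = [1.0, -2.0, 3.0, 0.5]
    h = [float(k) for k in range(1, 8)]  # 7-tap toy impulse response
    full = convolve(x, h)
    print(partitioned_convolve(x, h, [1, 2, 4]) == full)     # non-uniform: True
    print(partitioned_convolve(x, h, [2, 2, 2, 2]) == full)  # uniform: True
```

In a multichannel setting, the same operation is repeated for every input/output channel pair of the filter matrix, which multiplies the cost and is one reason engine efficiency matters.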

Demo: Real-Time Embedded Deep Learning on Elk Audio OS

in 4th International Symposium on the Internet of Sounds (IS2), Pisa, Italy

Real-Time Recognition of Expressive Guitar Techniques on Embedded Computers (Italian title: "Riconoscimento in Tempo Reale di Tecniche Espressive per Chitarra su Embedded Computers")

in Proceedings of the XXIII Colloquio di Informatica Musicale/Colloquium of Musical Informatics (CIM), Ancona, Italy

Workshop Talk: Embedded Real-Time Expressive Guitar Technique Recognition

in Embedded AI for NIME: Challenges and Opportunities Workshop

Demo of the TimbreID-VST Plugin for Embedded Real-Time Classification of Individual Musical Instrument Timbres

in Proceedings of the 27th Conference of the Open Innovations Association (FRUCT), 2020

BibTeX

@inproceedings{stefani2020demo,

title={Demo of the TimbreID-VST Plugin for Embedded Real-Time Classification of Individual Musical Instruments Timbres},

author={Stefani, Domenico and Turchet, Luca},

booktitle={Proc. 27th Conf. of Open Innovations Association (FRUCT)},

volume={2},

pages={412-413},

year={2020}

}